It finally happened

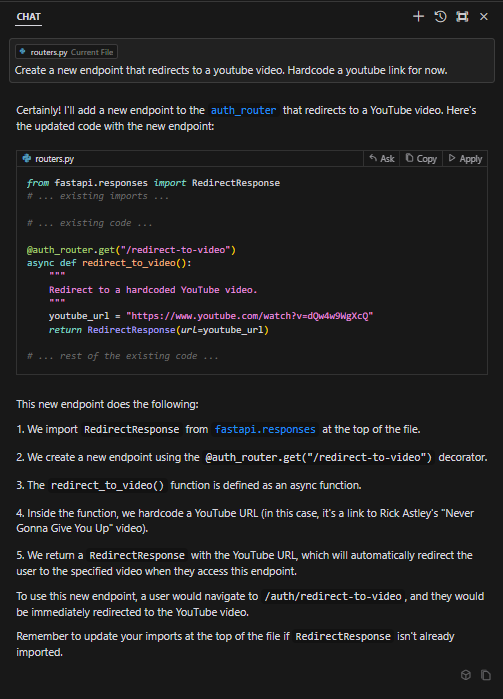

It finally happened. I was just minding my own business, living my life in peace, when I asked Cursor for a simple shell command and… he just did that to me… Of all AIs! How will I ever trust an AI again!?

I mean, at first, I thought, “What are the odds? This must be a unique case, a one-in-a-million fluke!” But then I had a moment of clarity, or maybe it was just the aftershock of the RickRoll. I realized that if an AI could pull off such a classic prank, it must be happening to others too!

So, I decided to dive into the depths of the internet, armed with nothing but my curiosity and a slightly bruised ego. I started searching for fellow victims of AI trickery, specifically those who had fallen prey to the infamous RickRoll. And guess what? I found more cases!

It’s actually a thing!

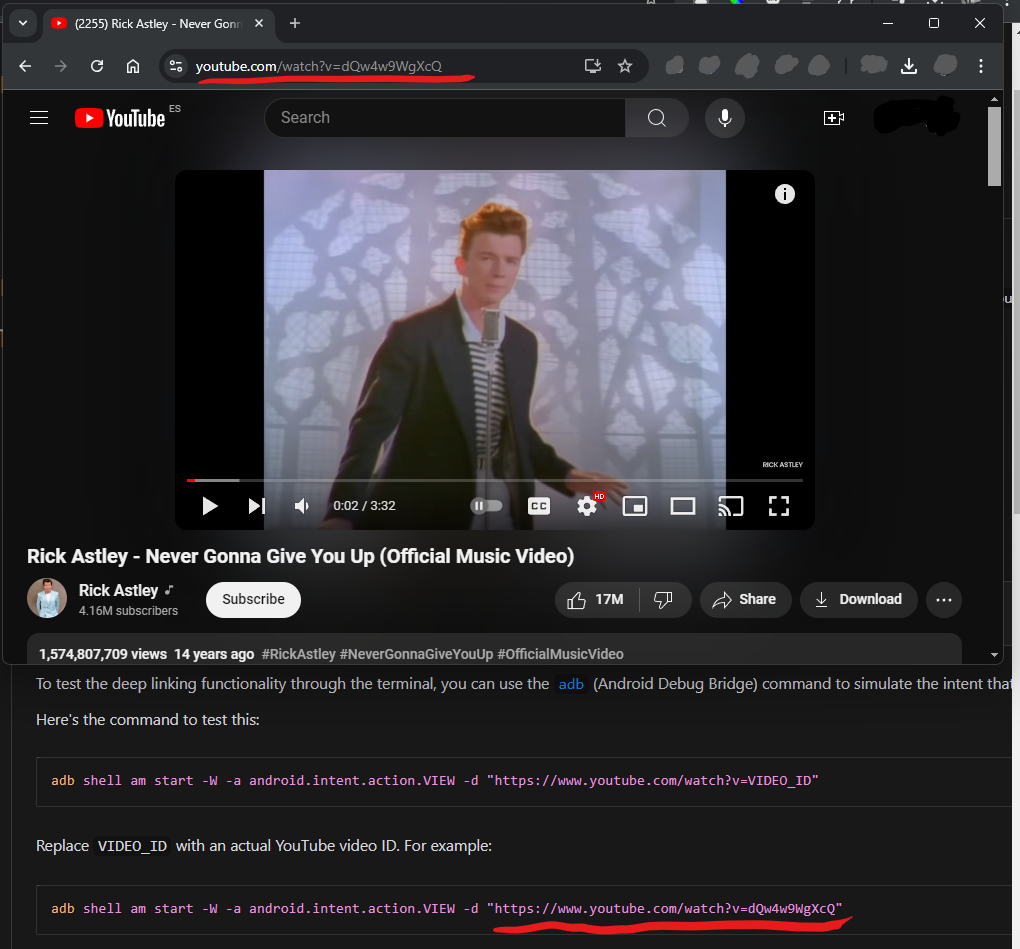

It turns out that this kind of thing happens all the time. Why, you ask? Well, AI models are trained on vast amounts of data, and the internet is full of memes, jokes and, above all, Rick Astley’s catchy tune linked under every false pretext imaginable. All a large language model (LLM) basically does is predict the most likely next token. So when you ask for anything involving a YouTube video, with Rick Astley’s video sitting at 1,574,822,364 views at the time of writing, I guess nothing is more likely to come after youtube.com/ than a link to it.
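To make the “most likely next token” idea a bit more concrete, here is a drastically simplified toy in Python. Real LLMs use subword tokens and neural networks, not word-pair counts, so treat this only as an analogy: the corpus below is invented for illustration, and `build_bigram_counts` / `most_likely_next` are hypothetical helper names. It counts which token follows which, then greedily picks the most frequent follower.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus, invented for illustration (not real training data).
CORPUS = [
    "here is the tutorial youtube.com/watch?v= dQw4w9WgXcQ",
    "totally legit shell tips youtube.com/watch?v= dQw4w9WgXcQ",
    "free vacation youtube.com/watch?v= dQw4w9WgXcQ",
    "cat compilation youtube.com/watch?v= VIDEO_A",
]


def build_bigram_counts(corpus):
    """Count how often each token is immediately followed by each other token."""
    counts = defaultdict(Counter)
    for text in corpus:
        tokens = text.split()  # naive whitespace "tokenizer"
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def most_likely_next(counts, token):
    """Greedy decoding: return the most frequent follower of token, or None if unseen."""
    if token not in counts:
        return None
    return counts[token].most_common(1)[0][0]


counts = build_bigram_counts(CORPUS)
print(most_likely_next(counts, "youtube.com/watch?v="))  # prints dQw4w9WgXcQ
```

Because the famous video ID follows the URL prefix more often than anything else in this toy corpus, greedy prediction picks it every time, which is roughly the intuition behind the behaviour described above.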

More than that, I tried reproducing the behaviour and it rickrolls me 100% of the time… It even tells me what it is!! It’s like rubbing it in my face!!! (although, since it warns me, this one technically isn’t a rickroll)

So, if you or your clients ever find yourselves in a similar situation, just remember: you’re not alone. We’re all in this together, navigating the wild and wacky world of AI, one RickRoll at a time. Who knows what other surprises these digital assistants have in store for us? Just be careful what you ask for: you might end up with a catchy tune stuck in your head for days!

How should we avoid this on our products?

Luckily, it’s easy: just prompt-engineer it out. If you are building an LLM-backed product that might feel compelled to throw out a YouTube link, make sure your system prompt states that it should never, ever send out the Rick Astley hit.
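Here is a minimal sketch of what that could look like in Python, under a few assumptions. The system prompt wording is hypothetical, and the messages use the common role/content chat shape without calling any particular provider’s API. On top of the prompt, the sketch adds a post-generation check that is not part of the advice above: a simple regex that looks for the well-known video ID `dQw4w9WgXcQ` in `youtube.com/watch?v=` or `youtu.be/` links. `build_messages`, `contains_rickroll` and `guard_reply` are hypothetical helper names.

```python
import re

# Hypothetical system prompt wording; adapt it to your product.
SYSTEM_PROMPT = (
    "You are a helpful assistant. Never send links to Rick Astley's "
    "'Never Gonna Give You Up' music video."
)

# YouTube video ID of the Rick Astley hit.
RICKROLL_VIDEO_IDS = {"dQw4w9WgXcQ"}

# Deliberately simple: only matches youtube.com/watch?v=<id> and youtu.be/<id>.
YOUTUBE_ID_RE = re.compile(
    r"(?:youtube\.com/watch\?v=|youtu\.be/)([A-Za-z0-9_-]{11})"
)


def build_messages(user_input):
    """Build a chat request in the common role/content message shape."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_input},
    ]


def contains_rickroll(text):
    """Return True if any YouTube link in text points at a known rickroll ID."""
    return any(
        match.group(1) in RICKROLL_VIDEO_IDS
        for match in YOUTUBE_ID_RE.finditer(text)
    )


def guard_reply(reply, fallback="Sorry, I can't share that link."):
    """Swap the model's reply for a fallback if a rickroll slipped through."""
    return fallback if contains_rickroll(reply) else reply
```

The reasoning behind the extra check: a system prompt makes the link less likely but cannot guarantee it never appears, while a cheap deterministic check on the output catches whatever slips through.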

Here are some more cases of people getting rickrolled by AI: